AI in Children's Residential Homes

Using AI in residential children's homes? Here's what Ofsted wants you to know.

If you're running a residential children's home, AI isn't some distant concern for tech companies to worry about. It's already in your building. Your night staff are using ChatGPT to help write incident reports. Young people are using AI apps on their phones. And someone on your team has probably asked an AI tool to help draft a behaviour support plan or summarise a particularly complex shift.

For residential settings, where you're responsible for children 24/7 and documentation standards are rigorous, you need to understand what this means.

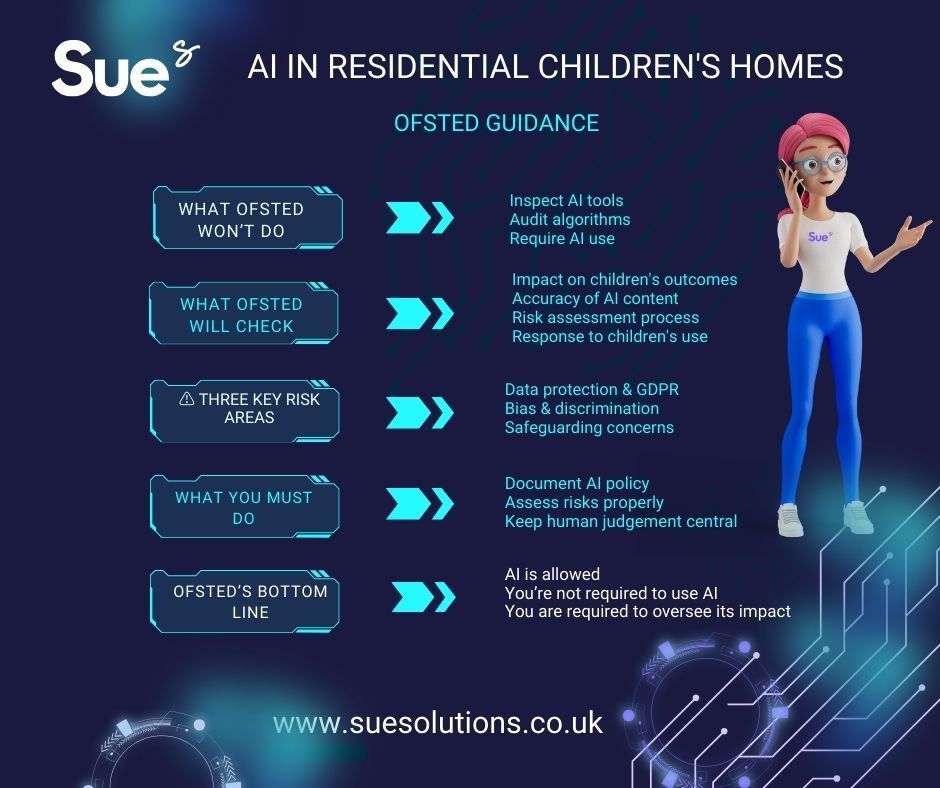

What Ofsted actually cares about (and what they don't)

Ofsted won't be inspecting your AI tools. They're not going to audit your software, assess your algorithms, or mark you down because someone's using ChatGPT instead of some expensive enterprise system. What they will scrutinise is the impact AI has on the children in your care.

As Ofsted states in their October 2025 guidance, inspectors can consider how the use of AI affects the outcomes and experiences of children and learners, but AI itself isn't a standalone inspection criterion. Ofsted doesn't inspect your filing cabinets, but they absolutely care whether important safeguarding information gets lost or mishandled. The same principle applies to AI.

How AI is actually being used in residential settings right now

Staff are using AI for admin tasks

Night staff are asking ChatGPT to help structure incident reports after a difficult shift. Support workers are using AI to help write daily logs when they're running behind. Senior staff are experimenting with AI to draft care plan reviews or summarise weekly team meetings.

Children are using AI constantly

Young people have AI apps on their phones. They're using them for homework, creating images, having conversations with chatbots, and sometimes using them in ways that are concerning – generating inappropriate content, seeking advice on harmful topics, or creating deepfakes of other children.

Some care management systems are building AI features

Auto-complete for incident reports. Suggested wording for behaviour records. Pattern recognition in incident data. Risk prediction algorithms.

Ofsted acknowledges that some children or staff members are likely using AI in connection with the care they provide. A 2024 survey by the Alan Turing Institute found that generative AI use is already widespread in the public sector, with over 80% of social care respondents believing GenAI could enhance productivity and reduce bureaucracy. But half of respondents felt there was a lack of clear guidance from employers on how to use these systems, and nearly half were unsure who was accountable for any outputs they used in their work.

That's the gap you need to close.

What inspectors will ask about your home

When AI use comes up during your inspection, inspectors will ask whether you've made sensible decisions. It's the same approach they take with any other tool.

On your record-keeping

If you're using AI to help generate behaviour support plans, daily logs, or incident summaries, inspectors will want to understand how you ensure these are accurate. Who's checking them? What's your process for ensuring nothing critical gets missed or misrepresented? Can you demonstrate that your records reflect what actually happened, not what AI thinks should have happened?

On risk assessment

Inspectors will ask what steps you've taken to ensure your use of AI properly considers risks. Were those risks evaluated when introducing the AI application? Do you have assurance processes to identify emerging risks? Have you considered what happens if AI-generated content contains errors that affect a child's care?

On children's use of AI

Inspectors will explore how you're ensuring that when children use AI – whether for homework or on their phones – this is in the children's best interests. In a residential setting where you're responsible for children around the clock, this takes on additional weight. What's your approach when a young person is using AI to generate self-harm content? Or creating inappropriate images? Or seeking advice about running away?

The specific risks in residential settings

The guidance highlights three risk areas that have particular relevance for residential children's homes:

Data protection

AI tools process large amounts of personal data about vulnerable children. Your existing obligations under GDPR and data protection legislation haven't disappeared. If staff are copying sensitive information about children into ChatGPT or other AI tools, that's likely to be a data breach. If your care management software uses AI, you need to understand what happens to that data, where it's processed, and who has access to it.

Bias and discrimination

AI can perpetuate biases present in its training data. If you're using AI to help assess risk or predict behaviour, you need to understand how it might discriminate against certain children based on their background, ethnicity, or past behaviour. Are you inadvertently creating a system that treats some children as higher risk simply because of patterns in historical data?

Safeguarding

This is the big one for residential settings. Children in care are already vulnerable. They're more likely to be exploited online. They're more susceptible to grooming. AI tools can be weaponised – creating fake images, generating harmful content, facilitating contact with dangerous individuals. You need policies that address this, staff who understand the risks, and systems that protect children without being oppressively restrictive.

What good practice looks like

North Yorkshire Council demonstrates what responsible AI implementation looks like in children's social care. Facing the reality that social workers were dedicating 80% of their time to administrative tasks due to the volume of data they manage, they developed an AI tool that tackles case notes, forms, assessments, and images.

They achieved a 90% reduction in time and cost for some data retrieval tasks, plus the ability to automatically generate eco-maps visualising networks surrounding children and families. But crucially, they didn't just implement and hope. They conducted an AI Ethics Impact Assessment to ensure the tool was used ethically, transparently, and in ways that safeguarded children's wellbeing.

For residential children's homes, good practice means:

Clear policies on staff use of AI

What's permitted? What's forbidden? If a night worker uses AI to help write an incident report at 3am after a difficult shift, is that acceptable? Who checks it? What information can never be put into an AI tool?

Robust checking mechanisms

Ofsted's focus is on outcomes, safeguarding and data governance, with human review remaining essential. Every AI-generated piece of content needs human oversight from someone who knows the child and was actually there.

Children's digital safety policies that address AI

Your existing policies on internet safety and mobile phone use need updating. What's your response when a young person shows you an AI-generated image of themselves? Or admits they've been chatting to an AI companion app that's giving them harmful advice?

Understanding what your software actually does

If your care management system has AI features, you need to understand them. What data is being processed? Where? By whom? What happens if it gets something wrong?

What you need to do right now

Document your approach

Write a clear policy on AI use by staff. What's permitted? What isn't? Who's responsible for checking AI-generated content? Make it part of your induction for new staff and bank workers.

Assess the risks properly

Not a tick-box exercise. Actual thinking about data protection implications, potential biases, and safeguarding concerns specific to your home and the children in your care. Consider scenarios: what if a staff member puts a child's personal information into ChatGPT? What if a child uses AI to create images of another child?

Keep human judgement central

AI can help with admin. It can speed up routine tasks. But final decisions about children's welfare, placement stability, behaviour support, and safeguarding must always involve professional judgement from people who know the child. An AI tool might suggest that a child is at high risk of going missing based on patterns in their behaviour logs. But only your staff know whether that child has actually been settling well this week and whether the historical pattern still applies.

Train your team

Make sure staff understand both the opportunities and limitations of AI. They need to know when AI-generated content needs additional scrutiny. This is particularly important for night staff, bank workers, or less experienced team members who might be tempted to rely too heavily on AI-generated incident reports or handover notes because they're not confident in their own writing.

Update your documentation

Your privacy notices need to reflect whether you're processing data using AI. Your Statement of Purpose might need updating if AI tools are part of your care delivery. Your children's guide needs age-appropriate information about AI use in the home and by children.

Talk to your software provider

If you're using care management software, ask them directly:

- Do you use AI?

- What for?

- Where is the data processed?

- What checks are in place?

- What happens if it gets something wrong?

If they can't answer these questions clearly, that's a problem.

Where this leaves your home

Ofsted supports innovation and the use of AI where it improves the education and care of children. They're not anti-technology. But they won't overlook situations where poorly implemented AI creates risks or undermines the quality of care.

The inspection approach is straightforward:

- Make sensible decisions

- Consider the risks

- Keep children's welfare at the centre of everything

If you can demonstrate that your use of AI supports better outcomes for the children in your home, you're fine. If you're not using AI at all, that's also fine. Ofsted does not expect or require providers to use AI in a certain way, or to use it at all. Just be aware that staff and the children in your care are almost certainly using these tools already – and you need a plan for managing that reality responsibly.

The technology isn't going away. The question isn't whether AI will be part of residential children's care, but whether we'll implement it thoughtfully or stumble through it without proper consideration. Ofsted's guidance gives you the framework to get this right.

Need help ensuring your residential care software supports compliant, child-focused practice? Book a demo to find out how we support residential children's homes with clear, auditable records and robust oversight that puts children first.